Professional learning communities have a track record of helping teachers make sense of student performance data, but they can — and should — do more to support meaningful changes in teaching practice.

Teachers working in collaborative teams — most frequently labeled professional learning communities (PLCs) or data teams — have become ubiquitous in K-12 education. And when such teams are implemented with fidelity and supported with adequate resources, they tend to lead to significant improvements in student performance (DuFour, Eaker, & DuFour, 2005).

PLCs are most often used to support teachers in analyzing student performance data, both formative and summative, and rethinking students’ learning needs. Typically, PLC members begin by collecting baseline data in a particular area where students appear to be struggling, and then they help each other define specific goals for student improvement and measures of progress toward reaching them. However, our research suggests that PLCs tend to devote little attention to a third topic, perhaps the most important one of all: how team members would have to change their teaching practices to reach those goals. In recent observations of more than 100 data teams, my colleagues and I found that as much as 90% of the time was focused on students’ learning and goals for improvement, and we saw very little discussion at all (much less rigorous, data-driven discussion) of instructional strategies that teachers were designing or trying to implement.

After reviewing classroom data and recalibrating their goals for learning, PLC members may very well see positive changes in student performance. But if they have made no serious effort to collect data on or analyze their own teaching practices, then how can they make informed judgments about why their students improved? Just as important, why would PLCs waste this tremendous opportunity to help teachers find out precisely what is and is not working in their classrooms? The problem, as Douglas Reeves (2015) has noted, is that this leaves teachers with an abundance of information about effects (i.e., data on student performance) but a dearth of information about causes (i.e., data on adult practices).

Our observations are entirely consistent with those made by Richard DuFour, who developed the PLC process and has worked with it extensively:

Too often, I have seen collaborative teams engage in the right work . . . up to a point. They create a guaranteed and viable curriculum, they develop common formative assessments, and they ensure that students receive additional time and support for learning through the school’s system of intervention. What they fail to do, however, is use the evidence of student learning to improve instruction. They are more prone to attribute student’s difficulties to the students themselves. If their analysis leads them to conclude that, “students need to study harder” or “students need to do a better job with their homework assignments” or “students need to learn how to seek help when they struggle to grasp a concept,” they have the wrong focus. Rather than listing what students need to do to correct the problem, educators need to address what they can do better collectively. To engage fully in the PLC process, a team must use evidence of student learning to inform and improve professional practice of its members (DuFour, 2015).

Balancing the data scales

Over the past 10 years, we have worked with teams of teachers from all grade levels — and from a range of urban, suburban, and rural districts — to move the collaborative process from a singular focus on student performance data to a more balanced approach that combines student performance data with adult practice data.

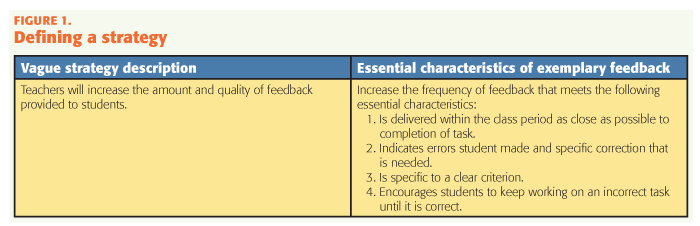

To an extent, our work resembles the typical PLC process: We begin with an analysis of data relevant to a significant student performance issue (academic or behavioral). This involves determining the current level of performance, setting a measurable goal for improvement, and choosing interim measures of progress. However, when it comes time to select a strategy or particular actions for PLC members, we ask the team to devote significant time to identifying specific, research-supported practices and describing, in measurable terms, what exemplary implementation would look like. In short, rather than allowing the team to be satisfied with a vague statement about how they plan to change what they do in the classroom, we insist on concrete details.

The key to this process lies in identifying precisely what counts as exemplary implementation. Why “exemplary”? First, because if students are struggling, then mediocre implementation likely will not move them to high levels. Further, if we neglect to define exactly what it means to put the strategy into practice, then we will have no way to make sense of any results that disappoint us — was the strategy flawed, we’ll wonder, or was it a good strategy poorly implemented? Nor, for that matter, will we be able to compare what we observe in different classrooms since every teacher might have a different idea of what it means to implement the given approach (Graff-Ermeling, Ermeling, & Gallimore, 2015).

Thus, we ask teachers to decide on a limited number of factors that they can use to determine whether they are implementing the given strategy in more or less the same way. That is, we aim for a sweet spot, ensuring some consistency of implementation while still letting individual teachers bring their own style and creativity to the classroom.

Recently, for example, we worked with a group of teachers at Northwest Middle School (not the real name) who were concerned about the amount of reteaching they did to help students reach mastery of classroom material. After studying and reflecting on performance data, they came to a hypothesis: They were giving students vague and infrequent feedback on their work, which left students unsure about their understanding of the content. In short, the team was able to zero in on a particular aspect of classroom practice. They identified a specific solution, giving students more detailed feedback on their work on a more regular basis, that they could implement in their classrooms and that could be boiled down to a number of measurable but not terribly prescriptive practices (such as responding to each student task within the same class period or using rubrics or other explicit criteria that show students precisely how they can improve). (See Figure 1.)

Measuring implementation

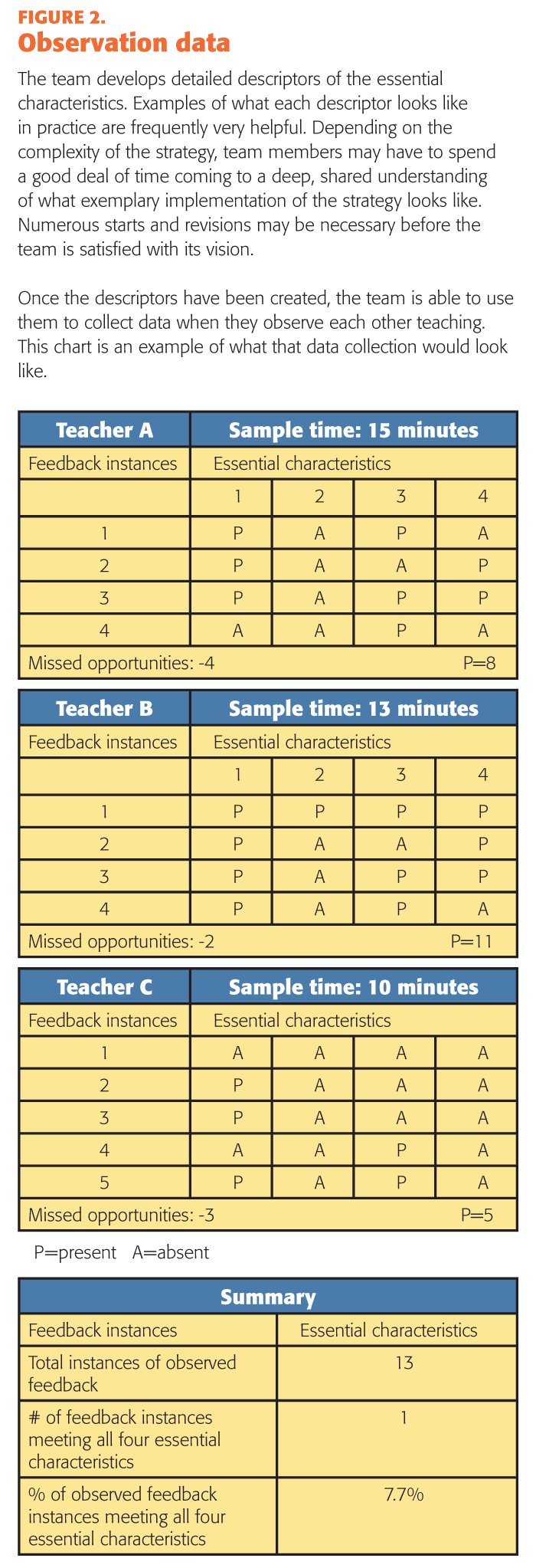

Armed with a clear description of a specific classroom strategy, the team can then agree on shared criteria to determine whether they are implementing it successfully and consistently. Then, they can collect data by observing one another teach — either live or on video — and keeping track of how often they see a particular practice being applied (or not applied when it could have been). The team must decide how many observations to conduct, but in this case the metric is a simple one: the percentage of observed instances of feedback containing all four essential characteristics that the group has specified.

For example, the team at Northwest Middle School decided that each member would prepare a video of a 10- to 15-minute slice of instruction:

In the example above, the baseline is 7.7% — that is, of the 13 observed instances of feedback given to students, only one met all four criteria the team identified as necessary to be considered exemplary (see Figure 2). On a closer look, though, some interesting details emerge. Note, for instance, that Teacher B scored considerably higher than the other team members, suggesting that the group may want to treat the teacher as a go-to person for advice on this teaching practice. This sort of thing happens quite often in PLCs. Further, it appears that characteristic #2 presents a challenge for the whole group, which means that it may be worth a closer look. Perhaps team members will want to clarify their definition of this practice, or maybe they simply don’t have much experience giving students this kind of feedback. Note, also, that there were nine instances where the observer saw a missed opportunity to provide students with feedback, suggesting another possible topic for discussion.
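The baseline arithmetic described above can be sketched in a few lines of Python. This is an illustration only, not the team's actual tool: the function name `exemplary_rate` and the record layout are assumptions, and the per-instance records are hypothetical, arranged solely so that the totals match the 13 observed instances and the single exemplary instance reported here.

```python
from typing import Sequence


def exemplary_rate(observations: Sequence[Sequence[bool]]) -> float:
    """Return the percentage of observed feedback instances that meet every criterion."""
    if not observations:
        raise ValueError("no feedback instances were observed")
    # An instance counts as exemplary only if all of its criteria were met.
    exemplary = sum(1 for criteria in observations if all(criteria))
    return 100.0 * exemplary / len(observations)


# Hypothetical records: one tuple per observed instance of feedback,
# one boolean per characteristic (four in this example).
# Only the totals (13 instances, 1 exemplary) come from the article.
observations = [(True, True, True, True)] + [(True, False, True, False)] * 12

baseline = exemplary_rate(observations)
print(f"{baseline:.1f}%")  # 1 of 13 instances -> 7.7%
```

Keeping one record per observed instance, rather than only a running total, would also let a team tally how often each individual characteristic is missed, which is the kind of detail the paragraph above draws out.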

While it may be alarming to teachers to get what seems like a low baseline score on their measure, they ought to see it as good news. After all, the team has identified a research-based teaching strategy that, if implemented well, has been shown to have a positive effect on student performance. They now have evidence that they are not implementing this strategy very well or very often, and they should be hopeful that as their implementation improves, so, too, will student performance.

At this point, the team is ready to define a specific goal for a change in classroom practice:

By June 2016, the percentage of observed instances of feedback from the grade 6 team that meet the exemplary level (as defined by the team’s Standards for Exemplary Feedback) will increase from 7.7% to 90%.

The team can also create a schedule for collecting data, which will let members document both their own changing practice and the change in student performance. (See Figure 3.)

Ongoing work of the team

Meeting on a regular basis, team members take on one primary task: determining how they will all reach the goal they’ve set for their practice. Strategies we have seen teams use include:

• Observe the most proficient practitioner;

• Prepare and deliver mock lessons to the team;

• Research video examples of exemplary implementation;

• Bring in an outside expert; and

• Video classroom samples and review/critique as a team.

In effect, these teachers are designing and implementing their own professional development, focusing on an issue that they have identified as important to them. Over time, they will monitor both data streams — the change in their practice and the change in student performance — and these sources of information will guide the direction of their work.

No doubt, this can be a time-consuming and difficult process, one that challenges teachers to rethink their professional norms and identities. That said, our experience has been that, given sufficient time and support (see Conditions for Optimizing Success, p. 71), classroom teachers can analyze very complex instructional practices, create data schemes for measuring these practices, design improvement plans, and monitor their effect on the development of important student behaviors. (For new evidence about the value of such teacher leadership, see Brenneman, 2016.) This work places high expectations on teachers, and this is as it should be. We cannot hold students to high expectations if we don’t first place high expectations on the adults charged with educating them.

References

Brenneman, R. (2016). Researchers say U.S. schools could learn from other countries on teacher professional development. Education Week, 35 (19).

DuFour, R. (2015). How PLCs do data right. Educational Leadership, 73 (3), 23-26.

DuFour, R., Eaker, R., & DuFour, R. (2005). On common ground: The power of professional learning communities. Bloomington, IN: Solution Tree Press.

Graff-Ermeling, G., Ermeling, B.A., & Gallimore, R. (2015). Words matter: Unpack the language of teaching to create shared understanding. JSD, 36 (6).

Reeves, D. (2015). Next generation accountability. Boston, MA: Creative Leadership Solutions. www.creativeleadership.net

Originally published in February 2017 Phi Delta Kappan 98 (5), 67-71. © 2017 Phi Delta Kappa International. All rights reserved.

ABOUT THE AUTHOR

Michael J. Wasta

MICHAEL WASTA is an education consultant based in West Hartford, Conn., and affiliated with Creative Leadership Solutions, Boston, Mass., and author of Harnessing the Power of Teacher Teams.