To assess the credibility of the information they find online, students shouldn’t start with a close reading of the given website. Rather, they should turn to the power of the web to determine its trustworthiness.

After the 2016 election, the nation’s most prominent news organizations launched efforts to help Americans navigate the deluge of information — much of it false or misleading — that floods their phones, tablets, and laptops. “Here’s how to outsmart fake news in your Facebook feed,” blared a CNN headline (Willingham, 2016). The Washington Post provided “the fact checker’s guide for detecting fake news” (Kessler, 2016), and the Huffington Post instructed readers on “how to recognize a fake news story” (Robins-Early, 2016). And the response went well beyond the publication of how-to guides. From the campuses of Silicon Valley tech giants to the halls of the U.S. Capitol, corporate leaders and policy makers set out to decipher digital misinformation’s role in the election. Google, Facebook, and Twitter all announced initiatives to combat the scourge of online deceit. Congressional committees convened hearings. Countless articles, cable news segments, and blog posts dissected the problem.

As this storm churned, our research group released findings that showed the problem was much broader than fake news, particularly when it comes to young people’s ability to make sense of the information they encounter online (McGrew et al., 2018; McGrew et al., 2017). Over the course of 18 months, we collected 7,804 responses to tasks that required students to evaluate online content, ranging from having them evaluate Facebook posts to asking them to find out who was behind partisan websites. Students of all ages struggled mightily. Middle school students mistook advertisements for news stories. High schoolers were unable to verify social media accounts. College students blithely accepted a website’s description of itself. The reaction to our report was immediate and overwhelming. Journalists, librarians, professors, tech executives, and educators from around the world contacted us. One question kept coming up: How can we help students do better?

Across the globe, efforts are now underway to address this question. In Canada, for example, Google provided $500,000 to fund the development of curriculum for 1.5 million students from ages nine to 19 (The Canadian Press, 2017). The Italian government is working with Facebook and national broadcasters on a new initiative to provide students in 8,000 high schools with instruction on how to identify apocryphal news stories (Horowitz, 2017). State legislatures across the United States have proposed and, in some cases, passed legislation to support media literacy instruction for students (Media Literacy Now, 2017).

What’s wrong with checklists

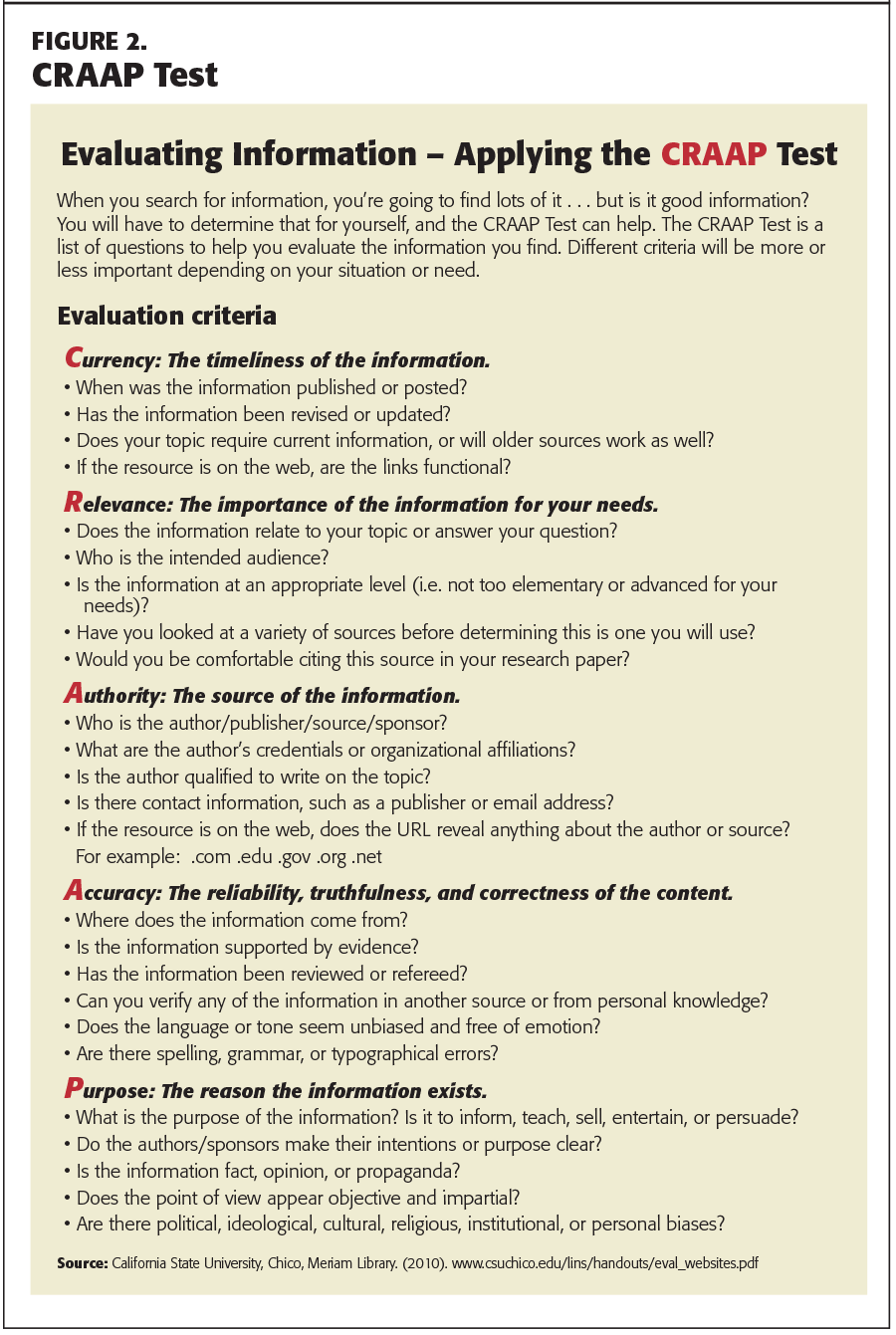

Although we face a digital challenge, educators have relied on a distinctly analog approach to solving it. The most prominent digital literacy organizations in the United States and Canada instruct students to evaluate the trustworthiness of online sources using checklists of 10 to 30 questions. (Common Sense Media, the News Literacy Project, Canada’s Media Smarts, the University of Rhode Island’s Media Education Lab, and the American Library Association all disseminate website evaluation checklists.) Such lists include questions like: Is a contact person provided? Are the sources of information identified? Is the website a .com (supposedly bad) or a .org (supposedly good)?

It’s easy to understand the appeal of such checklists. They are simple-to-use tools intended to support students in an area where we know they need help. On the other hand, as far as we can tell, none of the checklists is based on research about what skilled people actually do when facing a computer screen. In fact, checklists may lead students astray.

In our research, we observed a group of fact-checkers from the nation’s most prestigious news outlets as they completed online tasks (Wineburg & McGrew, 2017). None of these fact-checkers used such a list. In fact, none came close to asking the kinds of questions recommended by checklists. When confronted by new information on an unfamiliar website, fact-checkers almost instantaneously left the site and read laterally — opening up new browser tabs and searching across the web to see what they could find about the trustworthiness of the source of information. Only after examining other sites did they return to read the material on the original site more closely.

The fact-checkers’ approach differed sharply from that of the college students and professors whom we also studied. The students (Stanford undergraduates) and professors (from a variety of universities) read vertically, wasting precious minutes evaluating the information within the original site before trying to figure out who was behind it. Both groups often fell victim to easily manipulated features of websites, such as official-looking logos and domain names. By comparison, fact-checkers read less but learned more, often in a fraction of the time (Wineburg & McGrew, 2017).

The fact-checkers’ strategy reveals the shortcomings of the checklist approach. For example, imagine if a group of students wanted to determine whether it is sound public policy to raise the minimum wage to $15 an hour. An online search might lead to minimumwage.com (see Figure 1), which features a sleek layout and “Research” and “Media” pages that link to scholarly reports and news articles. According to its “About” page, minimumwage.com is a project of the Employment Policies Institute, “a non-profit research organization dedicated to studying public policy issues surrounding employment growth” (minimumwage.com, n.d.).

To evaluate the trustworthiness of any given website, the checklist approach instructs students to consider questions such as: Who is the intended audience? Are there spelling errors? When was the information published? Do pages load quickly? What the checklists omit, however, is the first move that the fact-checkers made: Look to the internet to see what other sources say about the site.

Were students to read laterally about the Employment Policies Institute, they would find articles that shed light on the nature and purpose of the organization. They might very well come across Salon’s headline “Corporate America’s new scam: Industry P.R. firm poses as think tank” (Graves, 2013) or a New York Times article detailing how the “Fight over minimum wage illustrates web of industry ties” (Lipton, 2014). In both cases, they would learn that EPI is a front group funded by Berman and Co., a Washington, D.C., public relations firm that works on behalf of the food and beverage industry, which, as might be expected, opposes raising the minimum wage. According to the New York Times, the firm’s owner, Richard Berman, has a track record of creating “official-sounding nonprofit groups” to disseminate information on behalf of corporate clients.

Now consider the conclusions students would draw about minimumwage.com if they used one of the most widely disseminated checklists. The CRAAP Test, created by Meriam Library at California State University, Chico (2010), instructs users to consider the currency, relevance, authority, accuracy, and purpose of a website (see Figure 2). The CRAAP Test and its variations appear on dozens of library websites from Florida to Alaska; the American Library Association promotes its use (Alaska State Libraries, Archives, and Museums, 2017; Alvin Sherman Library, n.d.; Crum, 2017); and it was featured in the November 2017 issue of Kappan. How does minimumwage.com fare on the CRAAP Test?

- Currency: The site’s home page features regular updates, has a 2018 copyright, and includes many functional links to other sites.

- Relevance: If a student is seeking information about minimum wage policy, the site is filled with relevant information, including details about minimum wage in every state and links to research from outlets ranging from the Harvard Business School to the New York Times.

- Authority: The site’s “About” page indicates that it is a project of the Employment Policies Institute. EPI provides a telephone number, an email address, and a street address in Washington, D.C. To further burnish its credentials, EPI’s “About” page describes the organization’s work: “EPI sponsors nonpartisan research which is conducted by independent economists at major universities around the country” (Employment Policies Institute, n.d.).

- Accuracy: The website is free of spelling, grammatical, and typographical errors. More important, it supplies a host of data sources to support its claims. From links to university research to polished reports produced by EPI, the site creates the impression of academic rigor. A careful reader will identify clues that the site is opposed to raising the minimum wage. However, the site makes its case in the detached tone of science, backed up by data-stuffed research reports.

- Purpose: According to the site, EPI’s purpose is “studying public policy issues surrounding employment growth” (minimumwage.com, n.d.). Although it is difficult to identify its true purpose, one could conclude that the site opposes increasing the minimum wage on the basis of data from disinterested economists and policy experts.

In short, minimumwage.com performs swimmingly on the CRAAP Test. The site passes other website checklists with flying colors as well.

Perhaps these lists were useful when students accessed the internet using a dial-up modem. But they are less effective in an age when anyone can publish a sleek website with a $25 template. The checklist approach did not anticipate an internet populated by websites that cunningly obscure their true backers: corporate-funded sites posing as grassroots initiatives (a practice commonly known as astroturfing); supposedly nonpartisan think tanks created by lobbying firms; and extremist groups mimicking established professional organizations. By focusing on features of websites that are easy to manipulate, checklists are not just ineffective but misleading. The internet teems with individuals and organizations cloaking their true intentions. At their worst, checklists provide cover to such sites.

Further, lengthy lists of questions (the CRAAP Test includes 25 items, for example) are impractical. It’s unrealistic to believe that kids (or any of us, for that matter) will have the patience to go through long lists of questions for every unfamiliar site they encounter.

Many students seem to have internalized a set of checklist-like criteria, though. We asked 197 undergraduates whether minimumwage.com was a reliable source of information about the minimum wage and allowed them to search anywhere on the web. Only 5% identified Berman and Co. as responsible for the website. We also gave this task to 95 11th graders enrolled in an Advanced Placement United States History course. The results were similar to those from college students. A mere 10% were able to correctly identify the organization behind minimumwage.com. Students often answered on the basis of issues addressed by checklists. For example, one college student zeroed in on the currency of the information: “It is reliable because the date of one article is 2014, which is still relatively new, meaning the information is probably still up-to-date regarding minimum wage.” Another evaluated the site’s authority: “Yes, it is a reliable source. The research is being conducted by experts or independent economists from major universities.” Other students seem to have taken to heart the recommendation, included in many checklists, to trust .org websites more than .com URLs.

As one wrote: “This is a reliable source of information. It is nonpartisan, so it isn’t biased toward a certain political view. Furthermore, it works with major universities, so it must be credible enough to work with universities. The organization it belongs to, EPI, is a nonprofit organization and has a .org website address.”

Instead of looking into who produced the website, the student evaluated irrelevant features of minimumwage.com and the EPI website and imputed special power to the .org URL. Yet, .org lost its cachet long ago; it is easy for all kinds of dubious organizations to obtain .org domain names.

A serious problem

The consequences of failing to prepare students to evaluate online material are real and dire. The health of our democracy depends on our access to reliable information, and increasingly, the internet is where we go to look for it — young people report that they get 75% of their news online (American Press Institute, 2015). For every important social and political issue, there are countless groups seeking to gain influence, often obscuring their true backers. If students are unable to identify who is behind the information they encounter, they are easy marks for those who seek to deceive them.

Given the stakes, we must act decisively to equip students for the present digital landscape. We need to teach them to move beyond the surface features of a site and give them tools to determine who is behind the information they consume. Our research with fact-checkers provides guidance for better approaches to evaluating online content.

To start, fact-checkers read laterally. They scan unfamiliar sites strategically and then quickly leave these sites in search of information about their credibility. Rather than take sites at face value, they look elsewhere for reliable information that can shed light on the original sites. Students should learn to be equally strategic. We should teach them that when they reach an unfamiliar site — even one with a polished look — they should open a new browser window and find out what other sources say.

Skilled teachers already use modeling to show students how to analyze Shakespearean sonnets or evaluate scientific claims. We must do the same with strategies for evaluating information online. Students need to see how skilled readers evaluate deceptive sites like minimumwage.com, and they need to have structured opportunities to practice this approach. (Sadly, there is no shortage of cloaked websites for students to investigate as they hone their evaluation skills.) To be sure, teaching students to practice lateral reading is no silver bullet, but it is a practical strategy that students can immediately implement when navigating the digital swamp.

We can also teach students to be more discerning consumers of search engine results. Many students trust that Google puts the most reliable sources at the top of the search results, and if they bother to look beyond the first few entries, they tend to click haphazardly. In contrast, the fact-checkers in our study displayed click restraint. Students need to learn how to decipher the snippets that accompany each search result, and to make a judicious choice before clicking on any one.

We can show students how search engines generate results and encourage them to think about why they choose to navigate to particular sites. Teachers can model ways to think about the information that search engines provide, dispelling common misconceptions along the way. For example, students often reflexively reject search results from Wikipedia because they’ve been taught that anyone can edit the entries. In practice, though, Wikipedia can be a useful starting place for online research, and the most heavily trafficked pages on Wikipedia can only be altered by a select number of Wikipedia editors.

Educators should understand, though, that teaching students to be careful consumers of online information will require a team effort and substantial amounts of time. It’s not enough to have the librarian teach a one-off lesson on the subject. Rather, to become adept at distinguishing between credible and spurious information, students will need reinforcement from across the curriculum. Science instructors must help them contend with websites created by anti-vaxxers and climate change deniers; English teachers must help them recognize and respond to online propaganda; math teachers must show them how not to be fooled by the misuse of polling data and statistics of all kinds; history teachers must help them distinguish between pseudo-scholarly arguments anchored with footnotes to nonexistent evidence and articles that have gone through rigorous peer review.

The challenge we face goes beyond students. Too many teachers regard their students as the experts, mistaking students’ fluency with digital devices for sophistication at judging the information such devices yield. Given the scale of the problem facing our democracy, teachers must be provided professional development about how to evaluate online information. Teachers also need instruction in how to integrate these new digital strategies into their classrooms and time to plan with colleagues in other subjects and grade levels to ensure integration across the curriculum.

We are not the first to call the checklist approach into question (Caulfield, 2016; Meola, 2004). However, the issue is now more pressing than ever. The aftermath of the 2016 election drew unprecedented attention to media literacy and spurred widespread support for initiatives funded by technology giants. If these efforts are to create positive change, they must move beyond stale curriculum materials of the past and embrace strategies that address the threats in our digital present.

References

Alaska State Libraries, Archives, and Museums. (2017, September 17). Digital literacy: Alaska Knowledge Center: About Internet. http://lam.alaska.gov/digitalliteracy/internet

Alvin Sherman Library, Research, and Information Technology Center, Nova Southeastern University. (n.d.). Evaluate sources — CRAAP test. http://nova.campusguides.com/evaluate

American Press Institute. (2015). How Millennials get news: Inside the habits of America’s first digital generation. www.americanpressinstitute.org/publications/reports/survey-research/millennials-news

Caulfield, M. (2016, December 19). Yes, digital literacy. But which one? Hapgood. https://hapgood.us/2016/12/19/yes-digital-literacy-but-which-one

Crum, M. (2017, March 9). After Trump was elected, librarians had to rethink their system for fact-checking. Huffington Post.

Employment Policies Institute. (n.d.). About Us. www.epionline.org/aboutepi

Graves, L. (2013, November 13). Corporate America’s new scam: Industry P.R. firm poses as think tank! Salon. www.salon.com/2013/11/13/corporate_americas_new_scam_industry_p_r_firm_poses_as_think_tank

Horowitz, J. (2017, October 18). In Italian schools, reading, writing and recognizing fake news. New York Times.

Kessler, G. (2016, November 22). The fact checker’s guide for detecting fake news. Washington Post.

Lipton, E. (2014, February 9). Fight over minimum wage illustrates web of industry ties. New York Times.

McGrew, S., Breakstone, J., Ortega, T., Smith, M., & Wineburg, S. (2018). Can students evaluate online sources? Learning from assessments of Civic Online Reasoning. Theory & Research in Social Education. doi: 10.1080/00933104.2017.1416320

McGrew, S., Ortega, T., Breakstone, J., & Wineburg, S. (2017). The challenge that’s bigger than fake news: Civic reasoning in a social media environment. American Educator, 41 (3), 4-11.

Media Literacy Now. (2017, July 17). Putting media literacy on the public policy agenda. https://medialiteracynow.org/your-state-legislation

Meola, M. (2004). Chucking the checklist: A contextual approach to teaching undergraduates web-site evaluation. portal: Libraries and the Academy, 4 (3), 331-344.

Meriam Library, California State University, Chico. (2010, September 17). Evaluating Information — Applying the CRAAP Test. www.csuchico.edu/lins/handouts/eval_websites.pdf

Minimumwage.com. (n.d.). About Minimumwage.com. www.minimumwage.com/about

Robins-Early, N. (2016, November 22). How to recognize a fake news story. Huffington Post.

The Canadian Press. (2017, September 19). Google funds teaching Canadian students to spot fake news. Toronto Sun.

Willingham, A. J. (2016, November 18). Here’s how to outsmart fake news in your Facebook feed. CNN. www.cnn.com/2016/11/18/tech/how-to-spot-fake-misleading-news-trnd

Wineburg, S. & McGrew, S. (2017). Lateral reading: Reading less and learning more when evaluating digital information (Stanford History Education Group Working Paper No. 2017-A1). https://ssrn.com/abstract=3048994

Originally published in March 2018 Phi Delta Kappan 99 (6), 27-32. © 2018 Phi Delta Kappa International. All rights reserved.

ABOUT THE AUTHORS

Joel Breakstone

JOEL BREAKSTONE is director of the Stanford History Education Group.

Sarah McGrew

SARAH MCGREW is an assistant professor at the University of Maryland College of Education, College Park.

Teresa Ortega

TERESA ORTEGA is a project manager at the Stanford History Education Group, Stanford University, Palo Alto, Calif.

Mark Smith

MARK SMITH is director of assessment at the Stanford History Education Group at Stanford University, Palo Alto, Calif.

Sam Wineburg

SAM WINEBURG is the Margaret Jacks Professor of Education and History at Stanford University, Palo Alto, Calif. He is the author of Why Learn History.